Hadoop has come up in a few conversations I've had in the last few days, and it's occurred to me that the supercomputing community continues having a difficult time fully understanding how Hadoop currently fits (and should fit) into scientific computing. HPCwire was kind enough to run a piece that let me voice my perspective of the realities of Hadoop use in HPC a few months ago--that is, scientists are still getting a feel for Hadoop and what it can do, and it just isn't seeing widespread adoption in scientific computing yet. This contrasts with the tremendous buzz surrounding the "Hadoop" brand and ultimately gives way to strange dialogue, originating from the HPC side of the fence, like this:

I'm not sure if this original comment was facetious and dismissive of the Hadoop buzz or if it was a genuinely interested observation. Regardless of the intent, both interpretations reveal an important fact: Hadoop is being taken seriously only at a subset of supercomputing facilities in the US, and at a finer granularity, only by a subset of professionals within the HPC community. Hadoop is in a very weird place within HPC as a result, and I thought it might benefit the greater discussion of its ultimate role in research computing if I laid out some of the factors contributing to Hadoop's current awkward fit. The rest of this post will strive to answer two questions: Why does Hadoop remain at the fringe of high-performance computing, and what will it take for it to be a serious solution in HPC?

#1. Hadoop is an invader

I think what makes Hadoop uncomfortable to the HPC community is that, unlike virtually every other technology that has found successful adoption within research computing, Hadoop was not designed by HPC people. Compare this to a few other technologies that are core to modern supercomputing:

- MPI was literally born at the world's largest supercomputing conference, and the reference implementation was developed by computer scientists at major universities and national labs. It was developed by scientists for scientists.

- OpenMP was developed by an industrial consortium comprised of vendors of high-performance computing hardware and software. Like MPI, this standard emerged as a result of vendor-specific threading APIs causing compatibility nightmares across different high-end computing platforms.

- CUDA grew out of Brook, which was developed by computer scientists at Stanford. Like MPI and OpenMP, CUDA is now largely targeted at high-performance computing (although this is changing, and it'll be interesting to see if adoption outside of HPC really happens).

By contrast, Hadoop was developed by Yahoo, and the original MapReduce was developed by Google. They were not created to solve problems in fundamental science or national defense; they were created to provide a service for the masses. They weren't meant to interface with traditional supercomputers or domain scientists; Hadoop is very much an interloper in the world of supercomputing.

The notion that Hadoop's commercial origins make it contentious for stodgy people in the traditional supercomputing arena may sound silly without context, but the fact is, developing a framework for a commercial application rather than a scientific application leaves it with an interesting amount of baggage.

#2. Hadoop looks funny

The most obvious baggage that Hadoop brings with it to HPC is the fact that it is written in Java. One of the core design features of the Java language was to allow its programmers to write code once and run it on any hardware platform, a concept that is diametrically opposed to the foundations of high-performance computing, where code should be compiled and optimized for the specific hardware on which it will run. Java made sense for Hadoop due to its origins in the world of web services, but Java maintains a perception of being slow and inefficient. Slow and inefficient codes are, frankly, offensive to most HPC professionals, and I'd wager that a majority of researchers in traditional HPC scientific domains simply don't know the Java language at all. I sure don't.

The idea of running Java applications on supercomputers is beginning to look less funny nowadays with the explosion of cheap genome sequencing. Some of the most popular foundational applications in bioinformatics (e.g., GATK and Picard) are written in Java, and although bioinformatics is considered an "emerging community" within the field of supercomputing, it is rapidly outgrowing the capabilities of lab-scale computing. Perhaps most telling are Intel's recent contributions to GATK, which facilitate much richer use of AVX operations for variant calling.

With that being said, though, Java is still a very strange way to interact with a supercomputer. Java applications don't compile, look, or feel like normal applications in UNIX as a result of their cross-platform compatibility. Its runtime environment exposes a lot of very strange things to the user for no particularly good reason (-Xmx1g? I'm still not sure why I need to specify this to see the version of Java I'm running, much less do anything else), and it doesn't support shared-memory parallelism in an HPC-oriented way (manual thread management, thread pools...yuck). For the vast majority of HPC users coming from traditional domain sciences, and for the professionals who support their infrastructure, Java applications remain unconventional and foreign.

#3. Hadoop reinvents HPC technologies poorly

For those who have taken a serious look at the performance characteristics of Hadoop, the honest truth is that it re-invents a lot of functionality that has existed in HPC for decades, and it does so very poorly. Consider the following examples:

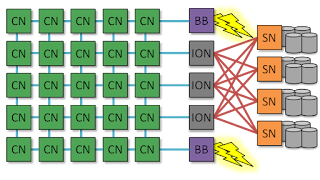

- Hadoop uses TCP with a combination of REST and RPC for inter-process communication. HPC has been using lossless DMA-based communication, which provides better performance in all respects, for years now.

- Hadoop doesn't really handle multi-tenancy and its schedulers are terrible. The architecture of Hadoop is such that, with a 3x replication factor, a single cluster can only support three concurrent jobs at a time with optimal performance. Its current scheduler options have very little in the way of intelligent, locality-aware job placement.

- Hadoop doesn't support scalable interconnect topologies. The rack-aware capabilities of Hadoop, while powerful for their intended purpose, do not support scalable network topologies like multidimensional meshes and toruses. They handle Clos-style network topologies, period.

- HDFS is very slow and very obtuse. Parallel file systems like Lustre and GPFS have been an integral part of HPC for years, and HDFS is just very slow and difficult to use by comparison. The lack of a POSIX interface means getting data in and out is tedious, and its vertical integration of everything from replication and striping to centralized metadata in Java makes it rather unresponsive.
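To make the topology point concrete, here is a minimal Python sketch. It assumes Hadoop's convention of naming each node by a path such as `/rack/host` and measuring network distance as the number of tree edges from each node up to their closest common ancestor (0 for the same node, 2 for the same rack, 4 for different racks). The `torus_hops` function and the 8-rack ring are hypothetical illustrations, not part of Hadoop: they show that a tree model assigns the same cost to every off-rack pair, even when a torus places some racks one hop apart and others several hops apart.

```python
def tree_distance(path_a: str, path_b: str) -> int:
    """Hadoop-style distance: edges from each node up to their closest common ancestor."""
    a = [part for part in path_a.split("/") if part]
    b = [part for part in path_b.split("/") if part]
    shared = 0
    for x, y in zip(a, b):
        if x != y:
            break
        shared += 1
    return (len(a) - shared) + (len(b) - shared)


def torus_hops(a: tuple, b: tuple, dims: tuple) -> int:
    """Minimal hop count between two coordinates on a torus with wrap-around links."""
    return sum(min(abs(i - j), d - abs(i - j)) for i, j, d in zip(a, b, dims))


if __name__ == "__main__":
    # Hypothetical 1D torus (a ring) of 8 racks, each holding a host named h0.
    ring = (8,)
    for other in (1, 4, 7):
        print(
            f"rack0 -> rack{other}: "
            f"tree distance = {tree_distance('/rack0/h0', f'/rack{other}/h0')}, "
            f"torus hops = {torus_hops((0,), (other,), ring)}"
        )
```

Under the tree model, rack1 and rack7 (one hop away on the ring) look exactly as far away as rack4 (four hops away), which is why rack awareness alone cannot describe a mesh or torus.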

However, these poor reinventions are not the result of ignorance; rather, Hadoop's reinvention of a lot of HPC technologies arises from reason #1 above: Hadoop was not designed to run on supercomputers and it was not designed to fit into the existing matrix of technologies available to traditional HPC. Rather, it was created to interoperate with web-oriented infrastructure. Specifically, addressing the four points above:

- Hadoop uses TCP/IP and Ethernet because virtually all data center infrastructure is centered around these technologies, not high-speed RDMA. Similarly, REST and RPC are used across enterprise-oriented services because they are simple protocols.

- Multi-tenancy arises when many people want to use a scarce resource such as a supercomputer; in the corporate world, resources should never be a limiting factor because waiting in line is what makes consumers look elsewhere. This principle and the need for elasticity is what has made the cloud so attractive to service providers. It follows that Hadoop is designed to provide a service for a single client such as a single search service or data warehouse.

- Hadoop's support for Clos-style (leaf/spine) topologies models most data center networks. Meshes, toruses, and more exotic topologies are exclusive to supercomputing and had no relevance to Hadoop's intended infrastructure.

- HDFS implements everything in software to allow it to run on the cheapest and simplest hardware possible--JBODs full of spinning disk. The lack of a POSIX interface is a direct result of Hadoop's optimization for large block reads and data warehousing. By making HDFS write-once, a lot of complex distributed locking can go out the window because MapReduce doesn't need it.
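The software-only design of HDFS is easiest to see in how it cuts a file into large, fixed-size blocks and replicates each one across racks. The sketch below assumes the Hadoop 2 default block size of 128 MB (`dfs.blocksize`) and the default three-replica placement described in the HDFS documentation: the first replica on the writer's node, the second on a node in a different rack, and the third on another node in that same remote rack. The function names and the toy cluster are hypothetical, and real HDFS applies additional checks (for example, on node load and free space) that are omitted here.

```python
import random

BLOCK_SIZE = 128 * 1024 * 1024  # assumed Hadoop 2 default for dfs.blocksize


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list:
    """Return (offset, length) pairs; only the last block may be short."""
    return [
        (offset, min(block_size, file_size - offset))
        for offset in range(0, file_size, block_size)
    ]


def place_replicas(writer: str, racks: dict, rng: random.Random) -> list:
    """Default-style placement for replication factor 3, assuming the writer is a datanode."""
    writer_rack = next(rack for rack, hosts in racks.items() if writer in hosts)
    # Only a remote rack with at least two hosts can hold both remaining replicas.
    remote_racks = [
        rack for rack, hosts in racks.items() if rack != writer_rack and len(hosts) >= 2
    ]
    remote = rng.choice(remote_racks)
    second, third = rng.sample(racks[remote], 2)
    return [writer, second, third]


if __name__ == "__main__":
    cluster = {
        "/rack0": ["n00", "n01", "n02"],
        "/rack1": ["n10", "n11", "n12"],
        "/rack2": ["n20", "n21", "n22"],
    }
    rng = random.Random(42)
    for offset, length in split_into_blocks(300 * 1024 * 1024):
        print(offset, length, place_replicas("n01", cluster, rng))
```

Because every block is written once and never modified in place, placement decisions like these only have to be made at write time, with no distributed locking afterwards.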

This loops back around to item #1 above: Hadoop came from outside of HPC, and it carries this baggage with it.

#4. Hadoop evolution is backwards

A tiny anecdote

I gave two MapReduce-related consultations this past month which really highlighted how the evolutionary path of Hadoop (and MapReduce in general) is not serving HPC very well.

My first meeting was with a few folks from a large clinical testing lab that was beginning to incorporate genetic testing into their service lineup. They were having a difficult time keeping up with the volume of genetic data being brought in by their customers and were exploring Hadoop BLAST as an alternative to their current BLAST-centric workflow. The problem, though, is that Hadoop BLAST was developed as an academic project when Hadoop 0.20 (which has evolved into Hadoop 1.x) was the latest and greatest technology. Industry has largely moved beyond Hadoop version 1 onto Hadoop 2 and YARN, and this lab was having significant difficulties in getting Hadoop BLAST to run on their brand new Hadoop cluster because its documentation hasn't been updated in three years.

The other meeting was with a colleague who works for a multinational credit scoring company. They were deploying Spark on their Cloudera cluster for the same reason as the aforementioned clinical testing lab: their data collection processes were outgrowing their computational capabilities and they were exploring better alternatives for data exploration. The problem they encountered was not one caused by their applications being frozen in time after someone finished their Ph.D.; rather, their IT department had botched the Spark installation.

Generally speaking, the technologies at the core of HPC all follow a similar evolutionary path into broad adoption. Both software and hardware technologies arise as the disparity between available and necessary technologies widens. Researchers often hack together non-standard solutions to these problems until a critical mass is achieved, and a standard technology emerges to fill the gap. OpenMP is a great example: before it was standardized, there were a number of vendor-specific, pragma-based multithreading APIs. Cray, Sun, and SGI all had their own versions that did the same thing but made porting codes between systems very unpleasant. These vendors ultimately all adopted a standard interface, which became OpenMP, and that technology has been embraced because it provided a portable way of solving the original motivating problem.

The evolution of Hadoop has very much been a backwards one; it entered HPC as a solution to a problem which, by and large, did not yet exist. As a result, it followed a common, but backwards, pattern by which computer scientists, not domain scientists, got excited by this new toy and invested a lot of effort into creating proof of concept codes and use-cases. Unfortunately, this sort of development is fundamentally unsustainable by itself, and as the shine of Hadoop wore off, researchers moved on to the next big thing and largely abandoned these model applications. This has left a graveyard of software, documentation, and ideas that are frozen in time and rapidly losing relevance as Hadoop moves on.

Consider this evolutionary path of Hadoop compared to OpenMP: there were no OpenMP proofs-of-concept. There didn't need to be any; the problems had already been defined by the people who needed OpenMP, so by the time OpenMP was standardized and implemented in compilers, application developers already knew where it would be needed.

Not surprisingly, the innovation in the Hadoop software ecosystem remains where it was developed: data warehousing and data analytics.

How can Hadoop fit into HPC?

So this is why Hadoop is in this awkward position, but does this mean Hadoop (and MapReduce) will never be welcome in the world of HPC? Alternatively, what would it take for Hadoop to become a universally recognized core technology in HPC?

I'll say up front that there are no easy answers; if there were, I wouldn't be delivering this monologue. However, solutions are being developed and tried to address a few of the four major barriers I outlined above.

Reimplement MapReduce in an HPC-oriented way

This idea has been tried in a number of different ways (see MPI MapReduce and Phoenix), but none have really gained traction. I suspect this is largely the result of one particular roadblock: there just aren't that many problems which are so burdensome in the traditional HPC space that reimplementing a solution in a relatively obscure implementation of MapReduce becomes worth the effort. As I mentioned in point #4 above, HPC vendors haven't been creating their own MapReduce APIs to address the demands of their customers, so Hadoop's role in HPC is not clearly addressing a problem that needs an immediate solution.

This is not to say that the data-oriented problems at which Hadoop excels do not exist within the domain sciences. Rather, there are two key roles that Hadoop/MapReduce will play in scientific computations:

- Solving existing problems: The most activity I've seen involving Hadoop in domain sciences comes out of bioinformatics and observational sciences. Bioinformatics as a consumer of HPC cycles is still in its infancy, but the data sets being generated by next-generation sequencers are enormous--the data to describe a single human genome, even when compressed, takes up about 120 GB. Similarly, advances in imaging and storage technology have allowed astronomy and radiology to generate extremely large collections of data.

- Enabling new problems: One of Hadoop's more long-term promises is not solving the problems of today, but giving us a solution to problems we previously thought to be intractable. Although I can't disclose too much detail, an example of this lies in statistical mechanics: many problems involving large ensembles of particles have relied on data sampling or averaging to reduce the sheer volume of numerical information into a usable state. Hadoop and MapReduce allow us to start considering what deeper, more subtle patterns may emerge if a massive trajectory through phase space could be dumped and analyzed with, say, machine learning methods.

Unfortunately, reimplementing MapReduce inside the context of existing HPC paradigms represents a large amount of work for a relatively small subset of problems.
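The programming model itself is small, which is part of why it is so tempting to reimplement. Below is a single-process Python sketch of the classic word-count example, using only the standard library: the mapper emits `(key, value)` pairs, a shuffle groups values by key, and the reducer folds each group. Everything that makes Hadoop (or an MPI-based reimplementation) worth using, namely distributing the shuffle across nodes, recovering from failures, and streaming data from parallel storage, is deliberately left out, and that omitted part is where the large amount of work lies.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple


def map_reduce(
    records: Iterable[str],
    mapper: Callable[[str], Iterator[Tuple[str, int]]],
    reducer: Callable[[str, List[int]], int],
) -> Dict[str, int]:
    groups: Dict[str, List[int]] = defaultdict(list)
    # Map phase: each input record yields zero or more (key, value) pairs.
    for record in records:
        for key, value in mapper(record):
            # Shuffle: group intermediate values by key.
            groups[key].append(value)
    # Reduce phase: visit keys in sorted order, mirroring Hadoop's sort before reducing.
    return {key: reducer(key, values) for key, values in sorted(groups.items())}


def word_count_mapper(line: str) -> Iterator[Tuple[str, int]]:
    for word in line.split():
        yield word.lower(), 1


def word_count_reducer(word: str, counts: List[int]) -> int:
    return sum(counts)


if __name__ == "__main__":
    lines = ["the quick fox", "The lazy dog"]
    print(map_reduce(lines, word_count_mapper, word_count_reducer))
```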

Incorporate HPC technologies in Hadoop

Rather than reimplementing Hadoop/MapReduce as an HPC technology, I think a more viable approach forward is to build upon the Hadoop framework and correct some of its poorly reinvented features I described in item #3 above. This will allow HPC to continuously fold in new innovations being developed in Hadoop's traditional competencies--data warehousing and analytics--as they become relevant to scientific problems. Some serious effort is being made to this end:

- The RDMA for Apache Hadoop project, headed by the esteemed D.K. Panda and his colleagues at OSU, has replaced Hadoop's TCP/RPC communication modes with native RDMA with really impressive initial results.

- Some larger players in the HPC arena have begun to provide rich support for high-performance parallel file systems as a complete alternative to HDFS. IBM's GPFS file system has a file placement optimization (FPO) capability that allows GPFS to act as a drop-in replacement for HDFS, and Intel was selling native Lustre support before they sold IDH to Cloudera.

- I would be remiss if I did not mention my own efforts to make Hadoop provisioning as seamless as possible on batch-based systems with myHadoop.
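As a rough illustration of what provisioning Hadoop inside a batch job involves, the Python sketch below turns a scheduler-provided node list into per-job Hadoop 2 configuration. It is hypothetical and not myHadoop's actual implementation: it assumes a PBS-style node file with one hostname per allocated core, picks the first host as the master for the NameNode and ResourceManager, and assumes NameNode RPC port 8020. `fs.defaultFS` and `yarn.resourcemanager.hostname` are real Hadoop 2 properties, and `slaves` is the Hadoop 2 name of the worker list file (Hadoop 3 renamed it `workers`).

```python
from typing import Dict, List


def unique_hosts(nodefile_text: str) -> List[str]:
    """Deduplicate a PBS-style node file (one line per allocated core), keeping order."""
    hosts: List[str] = []
    for line in nodefile_text.splitlines():
        host = line.strip()
        if host and host not in hosts:
            hosts.append(host)
    return hosts


def _site_xml(properties: Dict[str, str]) -> str:
    """Render a minimal Hadoop *-site.xml document from name/value pairs."""
    body = "".join(
        f"  <property>\n    <name>{name}</name>\n    <value>{value}</value>\n  </property>\n"
        for name, value in properties.items()
    )
    return f'<?xml version="1.0"?>\n<configuration>\n{body}</configuration>\n'


def render_job_config(hosts: List[str], namenode_port: int = 8020) -> Dict[str, str]:
    """Map config file names to contents for a job-private Hadoop 2 cluster."""
    master = hosts[0]  # assumption: first allocated node runs NameNode + ResourceManager
    return {
        "slaves": "\n".join(hosts) + "\n",  # every node runs a DataNode and NodeManager
        "core-site.xml": _site_xml({"fs.defaultFS": f"hdfs://{master}:{namenode_port}"}),
        "yarn-site.xml": _site_xml({"yarn.resourcemanager.hostname": master}),
    }


if __name__ == "__main__":
    example = "node01\nnode01\nnode02\nnode02\nnode03\n"
    for name, text in render_job_config(unique_hosts(example)).items():
        print(f"== {name} ==\n{text}")
```

A real deployment would also point HDFS data directories at node-local scratch space, format the NameNode, and start and stop the daemons within the job's lifetime; those steps are omitted here.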

In addition to incorporating these software technologies from HPC into Hadoop, there are some really innovative things you can do with hardware technologies that make Hadoop much more appealing to traditional HPC. I am working on some exciting and innovative (if I may say so) hardware designs that will further lower the barrier between Hadoop and HPC, and with any luck, we'll get to see some of these ideas go into production in the next few years.

Make MapReduce Less Weird

The very nature of MapReduce is a very strange one to supercomputing: it solves a class of problems that the world's fastest supercomputers just weren't designed to solve. Rather than making raw compute performance the most important capability, MapReduce treats I/O scalability as the most important capability, and CPU performance is secondary. As such, it will always be weird until such a day comes when science faces an equal balance of compute-limited and data-limited problems. Fundamentally, I'm not sure that such a day will ever come. Throwing data against a wall to see what sticks is good, but deriving analytical insight is better.

With that all being said, there's room for improvement in making Hadoop less weird. Spark is an exciting project because it sits at a nice point between academia and industry; developed at Berkeley but targeted directly at Hadoop, it feels like it was developed for scientists, and it treats high performance as a first-class citizen by providing the ability to utilize memory a lot more efficiently than Hadoop does. It also doesn't have such a heavy-handed Java-ness to it and provides a reasonably rich interface for Python (and R support is on the way!). There still are a lot of rough edges (this is where the academic origins shine through, I think), but I'm hopeful that it cleans up under the Apache project.

Perhaps more than (or inclusive of) the first two paths forward in increasing MapReduce adoption in research science, Spark holds the most promise in that it feels less like Hadoop and more normal from the HPC perspective. It doesn't force you to cast your problem in terms of a map and a reduce step; the way in which you interact with your data (your resilient distributed dataset, or RDD, in Spark parlance) is much more versatile and is more likely to directly translate to the logical operation you want to perform. It also supports the basic things Hadoop lacks such as iterative operations.
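The iterative-operation point is worth a small illustration. The pure-Python sketch below runs a toy one-dimensional k-means two ways: once re-reading the input on every iteration, the way a chain of Hadoop MapReduce jobs re-reads its input from HDFS, and once reading it a single time and keeping it in memory, the role `RDD.cache()`/`persist()` plays in Spark. `CountingStore` and `run_kmeans` are hypothetical stand-ins written for this example; they are not Spark or Hadoop APIs.

```python
from collections import defaultdict
from typing import List


class CountingStore:
    """Hypothetical stand-in for data on disk (e.g. HDFS) that counts full scans."""

    def __init__(self, points: List[float]):
        self._points = list(points)
        self.scans = 0

    def read_all(self) -> List[float]:
        self.scans += 1
        return list(self._points)


def kmeans_step(points: List[float], centroids: List[float]) -> List[float]:
    # "Map": assign each point to its nearest centroid, accumulating (sum, count).
    sums = defaultdict(lambda: [0.0, 0])
    for x in points:
        idx = min(range(len(centroids)), key=lambda i: abs(x - centroids[i]))
        sums[idx][0] += x
        sums[idx][1] += 1
    # "Reduce": average each cluster; a centroid with no points stays where it is.
    return [sums[i][0] / sums[i][1] if sums[i][1] else c for i, c in enumerate(centroids)]


def run_kmeans(store: CountingStore, centroids: List[float], iterations: int, cache: bool) -> List[float]:
    cached = store.read_all() if cache else None  # Spark-style: load once, keep in memory
    for _ in range(iterations):
        points = cached if cache else store.read_all()  # MapReduce-style: rescan each pass
        centroids = kmeans_step(points, centroids)
    return centroids


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
    for cache in (False, True):
        store = CountingStore(data)
        result = run_kmeans(store, [0.0, 5.0], iterations=5, cache=cache)
        print(f"cache={cache}: centroids={result}, scans={store.scans}")
```

Both variants compute the same centroids; the difference is that the uncached one pays for a full scan of the input on every iteration, which on a real cluster means repeated disk and network I/O.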

Moving Forward

I think I have a pretty good idea about why Hadoop has received a lukewarm, and sometimes cold, reception in HPC circles, and many of these underlying reasons are wholly justified. Hadoop's from the wrong side of the tracks from the purists' perspective, and it's not really changing the way the world will do its high-performance computing. There is a disproportionate amount of hype surrounding it as a result of its revolutionary successes in the commercial data sector.

However, Hadoop and MapReduce aren't to be dismissed outright either. There is a growing subset of scientific problems that are running up against a scalability limit in terms of data movement, and at some point, solving these problems using conventional, CPU-oriented parallelism will amount to using the wrong tool for the job. The key, as is always the case in this business, is to understand the job and understand that there are more tools in the toolbox than just a hammer.

As these data-intensive and data-limited problems gain a growing presence in traditional HPC domains, I hope the progress being made on making Hadoop and MapReduce more relevant to research science continues. I mentioned above that great progress is being made towards truly bridging the gap of utility and making MapReduce a serious go-to solution to scientific problems, and although Hadoop remains on the fringe of HPC today, it won't pay to dismiss it for too much longer.
